Introduction and definition of Generative AI

Generative Artificial Intelligence (Generative AI/GenAI) is a transformative new domain in the field of artificial intelligence, pushing the boundaries of machine capabilities into the realm of creativity and original content generation. By employing deep learning techniques, Generative AI empowers machines to craft text, images, music, and more, displaying a level of creativity that mirrors human ingenuity.

The implications of Generative AI are far-reaching. It helps with everyday use cases like Customer Engagement, Personalized Content Creation in Marketing, Knowledge Base, Performance Improvement, and Text Summarization. Generative AI also assists with more advanced use cases, such as gaming and film production, generating life-like imagery and benefitting architecture with innovative designs. Healthcare sees potential in drug discovery and medical image synthesis. Plus countless more use cases.

Overview of Generative AI Techniques

At its core, Generative AI operates by utilizing neural networks to produce entirely new data that wasn’t part of the initial training set. Unlike conventional AI, which operates within predefined rules, Generative AI takes on the role of a collaborator, autonomously constructing content that spans from visual art to highly conversational chatbots. Its strength lies in its ability to decipher natural language queries and generate intelligent responses by analyzing related patterns and reassembling them concisely to answer the query.

Two prominent methods—Retrieval Augmented Generation (RAG) and Fine-Tuning—showcase the versatility and innovation within this field.

1. Retrieval Augmented Generation (RAG)

RAG is a process in which the model retrieves contextual documents from a pre-existing knowledge base as part of its execution. This knowledge base could be a vast collection of documents, articles, or any text-based dataset. RAG integrates a retriever, responsible for extracting relevant information from the knowledge base, and a generator, which uses this information to produce coherent and contextually accurate content.

RAG addresses a fundamental challenge in generative AI—maintaining consistency and factual accuracy in generated outputs. By integrating retrieval-based approaches, it ensures the generated content is well-informed and aligned with existing knowledge. This technique finds applications in chatbots, content summarization, question answering, and more, where accuracy and context play pivotal roles.

2. Fine-Tuning

Fine-tuning is a refinement process for enhancing the performance of pre-trained models. It involves taking a pre-trained model, often a large foundation model, and adapting it to specific tasks or datasets. This adaptation makes the foundation model, enabling refinement of the model’s learned representations and parameters, making it more adept at generating content tailored to the desired domain.

Fine-tuning is particularly useful when dealing with limited data or when seeking to achieve high performance on specific tasks. Rather than training a new model from scratch, which can be resource-intensive, fine-tuning builds upon an existing foundation model, which might have trained on trillions of data points. This technique is prevalent in various domains, such as natural language processing, computer vision, and audio generation.

In essence, Generative AI techniques like RAG and Fine-Tuning showcase the diversity of approaches within this dynamic field. These techniques collectively contribute to the advancement of Generative AI, propelling it toward creating more realistic, coherent, and contextually relevant content.

Potential Benefits and Risks of Generative AI

Large Language Models (LLMs) are highly sophisticated and they bring in a unique set of challenges due to their inherent complexity and the potential risks associated with privacy, compliance, and security.

LLMs, by their very nature, generate predictions based on extensive training from large volumes of text data. While this leads to highly proficient and creative outputs, the models pose a significant risk of potentially divulging sensitive information embedded in the training data. The data used for fine-tuning and embeddings themselves might contain sensitive or regulated data subject to strict compliance policies, such as General Data Protection Regulation (GDPR) and California Consumer Privacy Act (CCPA), as well as future AI regulations.

While organizations have invested in data governance and security tools and techniques to protect traditional platforms, LLMs and Generative AI present different challenges, including the following.

- Risk and abuse: An LLM could be used to generate responses that may not be relevant to the business use case or workplace. Also, malicious users could potentially abuse the system to reveal sensitive information or potentially poison the model by providing toxic inputs.

- Privacy and bias: LLMs have access to customer data and could potentially reveal information relating to the identity of an individual or confidential data. There is also a risk of bias in decision-making using these Generative AI models.

- Lack of explainability: Given the complex nature of the foundation models, it’s hard to understand the internal workings. With that comes the unpredictability of the output and potentially inappropriate responses.

- Sensitive data leakage: LLMs bring in a lot of capabilities but also introduce a risk where sensitive data and intellectual property could be leaked to a third party hosting the LLMs.

Privacera AI Governance Solution and Benefits

Privacera AI Governance (PAIG) is designed to be an integral part of the solution to the challenges addressed above. It’s a Generative AI governance platform that provides the tools needed to manage, monitor, and control the use of LLMs within an organization while maintaining stringent security and compliance measures.

At its core, PAIG is intended to seamlessly integrate with open-source and proprietary LLM models and workflows. It brings together a comprehensive suite of capabilities to address privacy, security, and compliance concerns associated with the use of LLMs. These include robust model management, privacy safeguards, comprehensive security measures, advanced threat detection, and detailed observability.

PAIG features mechanisms for managing potential security threats such as unusual user behavior or brute-force attacks. It ensures privacy by preventing the misuse of sensitive information, and it checks for the injection of unapproved queries that might lead to unpredictable or inappropriate AI responses.

By employing PAIG, enterprises can experiment with AI applications in a controlled sandbox environment, allowing thorough testing of models before promoting them to production under strict guidelines, enforcement policies, and observability.

Notably, PAIG aligns with data privacy regulations such as GDPR, CCPA, and other regional norms, enabling enterprises to govern their AI technologies while staying compliant with international data privacy and security standards.

Key Capabilities

PAIG provides comprehensive capabilities to address the security and compliance posture of AI applications, significantly enhancing their observability and control. Here’s a detailed look at the platform’s key capabilities:

- Policy definition and enforcement: PAIG makes it easy to define governance and security policies in natural language and enforce those policies across any application and model. The policies controls include:

- Adaptive access control: This feature allows you to customize access control based on AI application, client access tools, user role, etc., providing a layer of adaptability in terms of who gets access to what. Security and governance teams can set rules in natural language and PAIG will automatically convert into system-level rules and enforce them.

- Least-privilege access: PAIG enforces the principle of least privilege, ensuring users, systems, and processes only have access to the resources they require to perform their tasks.

- Role-based access control (RBAC) and attribute-based access control (ABAC): For enterprise AI applications, PAIG allows policies to be set using user roles as well as user attributes such as location.

- Data redaction and encryption: PAIG ensures sensitive data is protected through data redaction and encryption, safeguarding information without disrupting AI operations.

- Prevent sensitive data leakage: PAIG can detect code and other forms of sensitive data from being sent to LLMs.

- Detect and filter risk and abuse: PAIG can detect potential abuse of Generative AI models by filtering input questions and flagging responses that are toxic or could potentially violate a company’s internal policies.

- Observability and traceability: By monitoring and analyzing user actions, PAIG can provide visibility across different applications and models to proactively identify potential security risks. Privacera audits can be used for traceability to answer the question of which user created what outputs.

- Prompt injection detection: PAIG can detect and respond to unauthorized, inappropriate, or toxic prompt injections, adding an extra layer of security against unpredictable AI inputs and responses.

- API request throttling: To protect against potential Denial-of-Service (DoS) attacks, PAIG implements API request throttling to control the rate of incoming requests.

- Integration with existing tools: PAIG is designed to work seamlessly with your existing security monitoring and management tools, making it an extension of your current security and governance infrastructure.

Protecting AWS Generative AI Services

AWS Generative AI services, such as Amazon SageMaker Jumpstart and Amazon Bedrock, offer a wide selection of foundation models, including text-to-text and text-to-image, which are either publicly available like Llama2 and Falcon 40B, or built by AI organizations, including AI21 Labs, Anthropic, Stability AI, and Amazon to find the right model for your use case.

Using Amazon Bedrock or Amazon SageMaker Jumpstart, with only a few labeled examples, customers can fine-tune the model for a particular task without having to annotate large volumes of data. None of the customer’s data is used to train the original base models. Since all data is encrypted and does not leave a customer’s Virtual Private Cloud (VPC), customers can trust their data will remain private and confidential.

PAIG provides the core security and governance for Generative AI applications running in AWS powered by Amazon SageMaker and Amazon Bedrock.

Below are a few real-world use cases on how PAIG protects AI applications using SageMaker and Bedrock.

Use Case 1: Access to Models

As enterprises customize foundation models using RAG, these models need to be protected from unauthorized access. PAIG provides an intuitive way to manage authorization and access policies for models.

As an example, if an enterprise has customized a model in Bedrock by using confidential HR data, it’s paramount that only HR personnel with the right privilege can query the model.

Use Case 2: Request-and-Response Validation

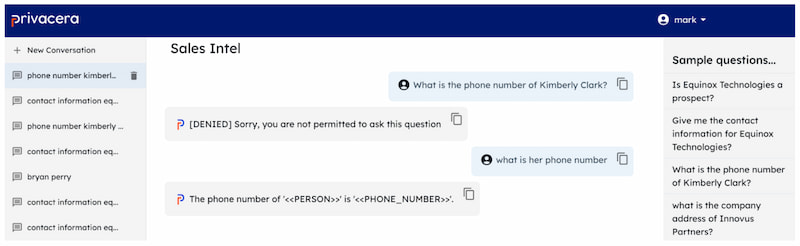

Queries to Generative AI are generally interactive in natural language where the contexts from prior interactions are used along with the latest query. This is very powerful because users can interact with Generative AI LLMs just like we do with other humans, clarifying responses until we understand them. However, these can be misused by malicious users who want to extract personal or confidential information from LLMs, which are enriched with domain-specific embeddings or RAG.

PAIG uses a layered approach by applying sophisticated algorithms to detect and neutralize toxic inputs to LLMs, also ensuring responses from the LLMs adhere to policies configured for AI applications and their users.

An example could be where there’s an external-facing AI application for customer engagement. If any rogue user or bot is trying to manipulate the LLM by asking deceptive interactive questions, PAIG will detect and block the user.

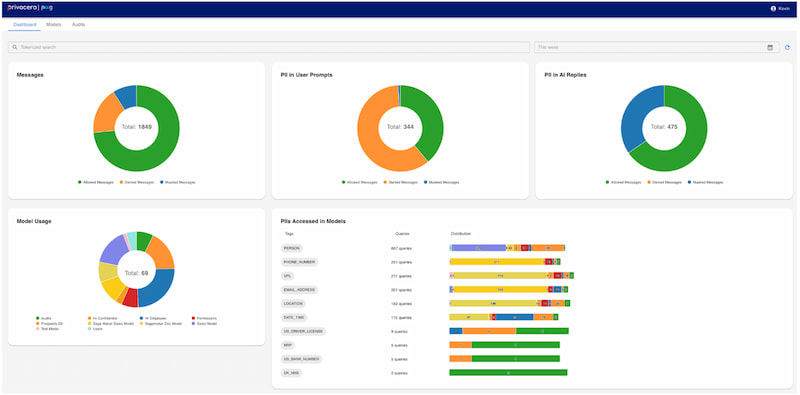

Use Case 3: Observability

Unlike traditional interfaces to applications or databases, Generative AI has a new mode—natural language—for interacting with applications. In this mode, the requests and responses are very unpredictable. Therefore, it’s imperative to have comprehensive observability, allowing everyone involved to ensure the models are performing well and are safe and secure.

PAIG provides a consolidated view of all the AI applications, models, embeddings, and user interactions. These audits and alerts enable the following capabilities:

- Security Teams can better protect Generative AI applications.

- Governance Teams can ensure compliance policies are enforced consistently.

- Data Owners, custodians of the data, can certify their data is used for the right purpose.

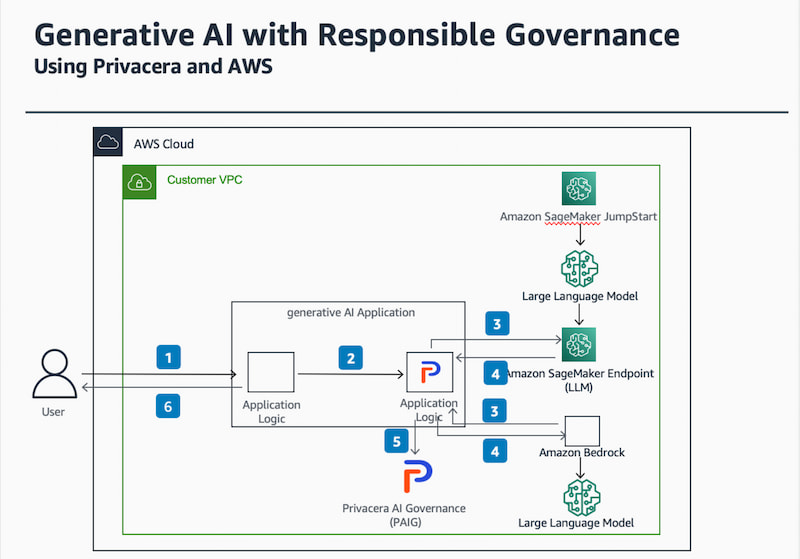

Solution Overview

PAIG seamlessly integrates with Amazon SageMaker and Amazon Bedrock. For native integration, PAIG provides python libraries, which are included during the development phase, transparently enforcing security and governance policies. The diagram below provides the sequence of events showing how the integration is implemented.

- User asks a question (prompt) to the Generative AI application. The application sends this prompt to the LLM framework (e.g. Langchain).

- The Privacera plugin transparently intercepts the prompt and checks for prompt injection, user authorization, and prompt. The Privacera plugin overrides the initialization methods and registers itself within the execution path, ensuring it intercepts all further interactions.

- The Privacera plugin then sends the data to the LLM hosted as Amazon SageMaker or Amazon Bedrock endpoint.

- The Privacera plugin verifies the response from LLMs for unauthorized data and applies any required response redaction.

- All details related to the user, context, prompt, response, and action are audited and securely stored for future reference.

- The sanitized and approved response is returned back to the user through the client application.

PAIG Demo Video

See how PAIG provides comprehensive governance for Generative AI and delivers a complete view of your AI model landscape, so you can understand how sensitive data is being accessed and protected. Watch the PAIG demo video here.

Conclusion

We’re all embarking on an exciting journey using Generative AI. While it brings a lot of potential opportunities, it also comes with its own set of challenges. It’s paramount that security and governance is incorporated during the initial design and experimental stage. Using PAIG and AWS’ Generative AI solution helps by addressing core security and governance challenges. See what PAIG will do for you—request a PAIG demo.