Request a Demo Today to See the Benefits of Data Security for Your GenAI App

The Data Security, Privacy and Safety Guardrails for GenAI Based on Open Standards

You have started kicking the tires on your first GenAI app or chatbot, but concerns about data security and safety are blocking it from production deployment.

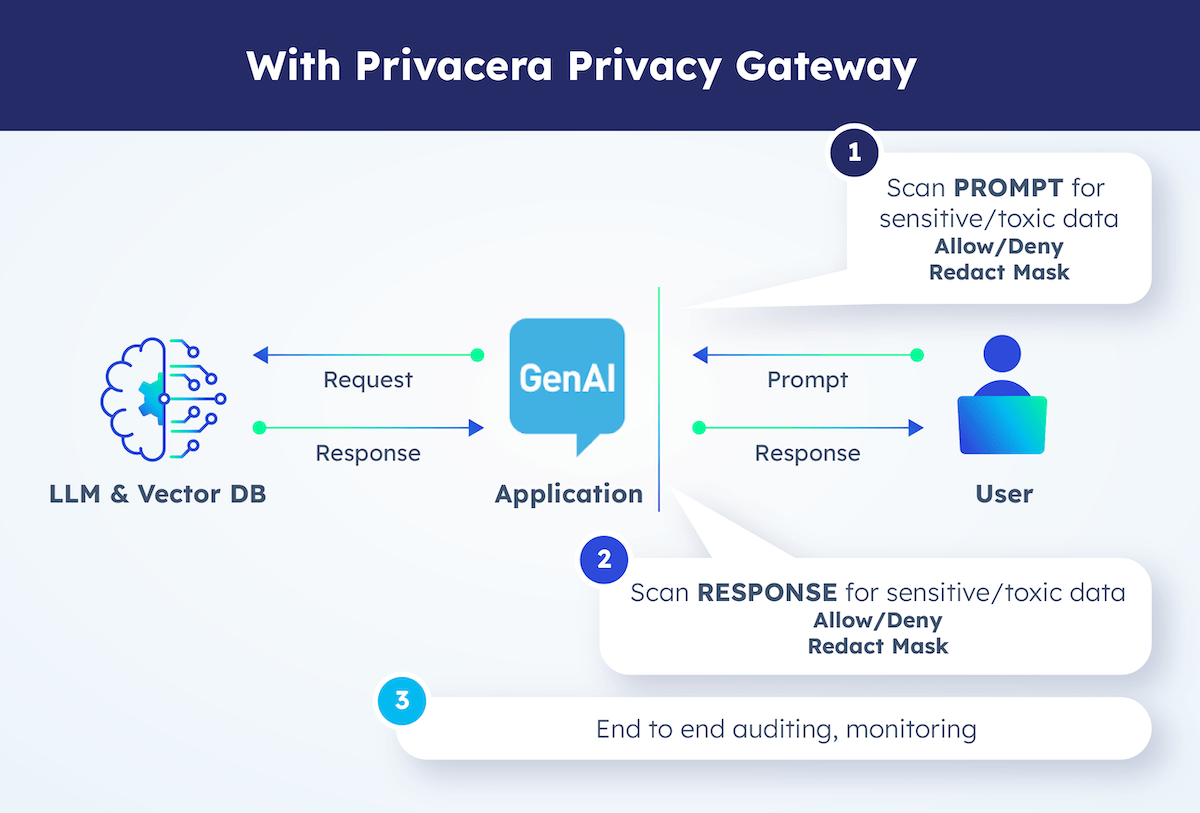

PAIG (Privacera AI Governance) can help you accelerate your GenAI journey. Built on open standards that are agnostic to your choice of foundation models, vector databases or libraries, PAIG integrates with your app to provide a real-time security gateway and safety guardrails, so you can deploy your app with confidence. Request a demo to see how we can help you with both security and safety.

See critical GenAI security and governance capabilities in action:

- Protect training data from a wide range of structured and unstructured data sources before it reaches your LLMs and vector databases.

- Create role-, attribute- and tag-based policies to manage what data can be used for training or fine-tuning models and embeddings.

- Apply fine-grained access control, classification and data filtering to vector database or RAG (retrieval-augmented generation) access requests.

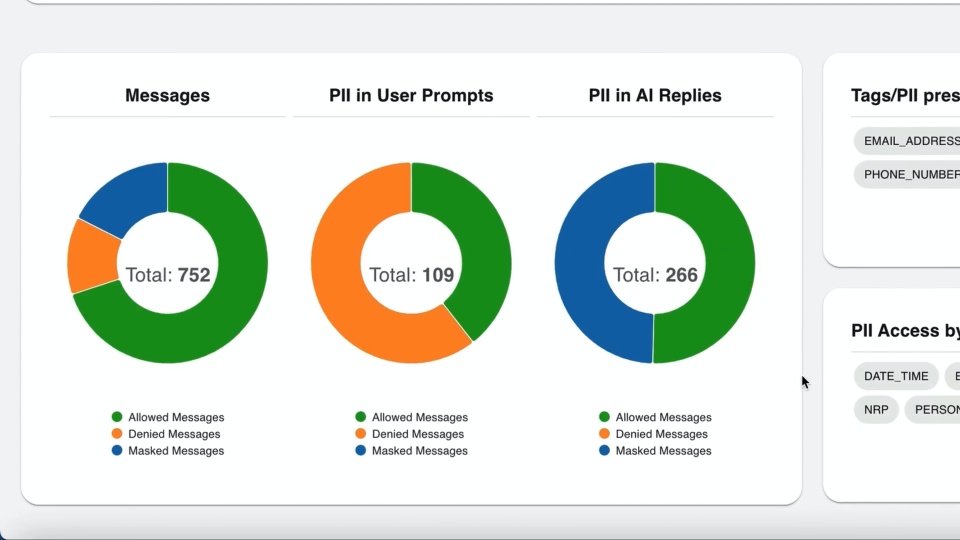

- Automatically scan, redact, block or allow sensitive or unauthorized data in prompts or responses in real time based on user privileges.

- Continuously audit and observe all app and model access, the presence of sensitive data and usage patterns.

- Easily integrate the PAIG agent into your GenAI app and connect to LangChain, Python and others to ensure data security regardless of your choice of LLM or vector database.
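To make the idea of privilege-based prompt guardrails concrete, here is a minimal Python sketch of the scan, redact, block or allow flow described above. It is not PAIG's API: the `PATTERNS` table, the `POLICY` table, the role names and the `apply_guardrail` function are all hypothetical, and two regular expressions stand in for real data classification.

```python
import re
from dataclasses import dataclass

# Hypothetical detectors for illustration only; two regular expressions are
# a stand-in for real sensitive-data classification.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}

# Hypothetical policy: the action applied to each tag, per user role.
POLICY = {
    "analyst": {"EMAIL": "allow", "SSN": "redact"},
    "guest": {"EMAIL": "redact", "SSN": "block"},
}


@dataclass
class Decision:
    allowed: bool  # False when the prompt or response must not be passed on
    text: str      # text after redaction (empty when blocked)
    tags: list     # sensitive-data tags detected before the decision was made


def apply_guardrail(role, text):
    """Scan text and allow, redact or block it based on the caller's role."""
    rules = POLICY.get(role, {})
    found = []
    for tag, pattern in PATTERNS.items():
        if not pattern.search(text):
            continue
        found.append(tag)
        # Default to "block" when the role or tag has no explicit rule.
        action = rules.get(tag, "block")
        if action == "block":
            return Decision(False, "", found)
        if action == "redact":
            text = pattern.sub(f"<{tag}>", text)
    return Decision(True, text, found)
```

In this sketch, the same prompt produces different outcomes for different roles: an `analyst` sees the SSN replaced with a `<SSN>` placeholder, while a `guest` request containing an SSN is blocked outright. Roles without a policy entry are blocked whenever sensitive data is detected, which is one possible default, not a statement about how PAIG behaves.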

You're expanding the data in your GenAI models and vector databases, which increases the potential business value of your app but also the risk of unintended data leakage. Take control of data security for GenAI today! Request your demo now.

Request a PAIG Demo

Privacera is trusted by Fortune 500 companies