Building a secure foundation for GenAI excellence

The world is buzzing with generative AI (GenAI) pitches and promises. In fact, 94% of business executives surveyed by Deloitte indicated that they believe GenAI is critical to their organization’s success. But there are dark clouds surrounding this desire. According to research from multiple organizations, 55% of organizations are concerned about data management and governance. Salesforce found in its own research of 150 CIOs from companies with 1,000 or more employees that the slow pace of implementing enterprise-wide AI strategies is largely due to the preparatory work CIOs believe they must prioritize first. While there is enthusiasm for AI’s potential, CIOs face significant hurdles, with security or privacy threats and a lack of trusted data ranking as their biggest fears.

At the same time, Gartner suggests that by 2025, 30% of GenAI projects will be abandoned after the proof of concept (POC) stage and that three of the top four issues relate to security, risk, and governance. And the sobering thought (drum roll please): these projects are believed to cost between $5m and $20m on average in upfront costs.

What’s with the weird title, you ask?

The title was born during a series of conversations I had with a good friend and advisor. For context, these took place during the Olympics in Paris this year. The question we raised was WHO WANTS TO BE AVERAGE? In anything? No one sets out to be average. Everyone wants to be a winner, and when the stakes are as high as they are in GenAI then so much more – why on earth would you want to be average?

As an athlete, you know what it takes to be a winner or a leader. It takes planning and a long-term commitment to excellence if you want to go to the Olympics.

My belief is that we can draw parallels between building a winning mindset for sports and building a foundation for excellence in your business – in this case for your GenAI initiatives.

Patterns emerge for leaders in GenAI

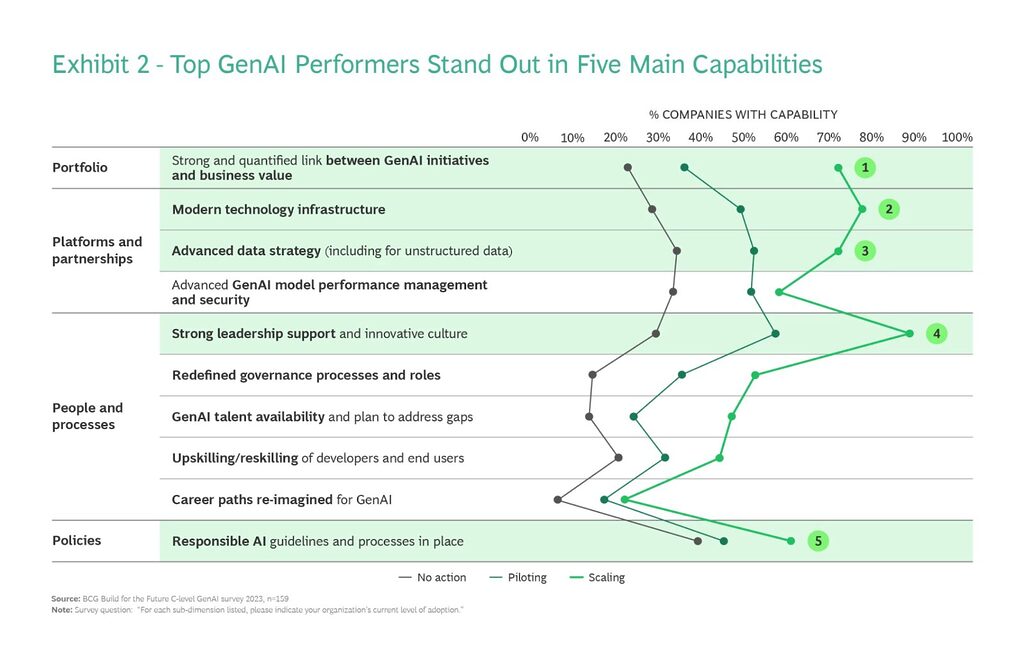

BCG recently surveyed the state of GenAI adoption and found that around 10% of respondent organizations are what it classifies as SCALING, i.e. they are getting real value and are scaling their GenAI initiatives. I would call these our LEADERS. According to the survey, 50% are in the middle, PILOTING GenAI in some fashion. Maybe these are our AVERAGE ones.

What is really interesting is that five distinct capabilities come to the fore where the SCALING organizations excel.

The green rows highlight these capabilities:

- Linking the GenAI initiative to some business outcome and value

- Modernizing tech infrastructure

- Data strategy

- Leadership buy-in

- Responsible AI

The LEADERSHIP BUY-IN and tying the initiative to business outcomes seem very obvious and most of us know that failing to deliver business value will kill any technology (and other) initiative.

I will focus the rest of this blog on the remaining three that I believe are crucial building blocks for a secure foundation – one that will set you up for repeatable excellence and scaling.

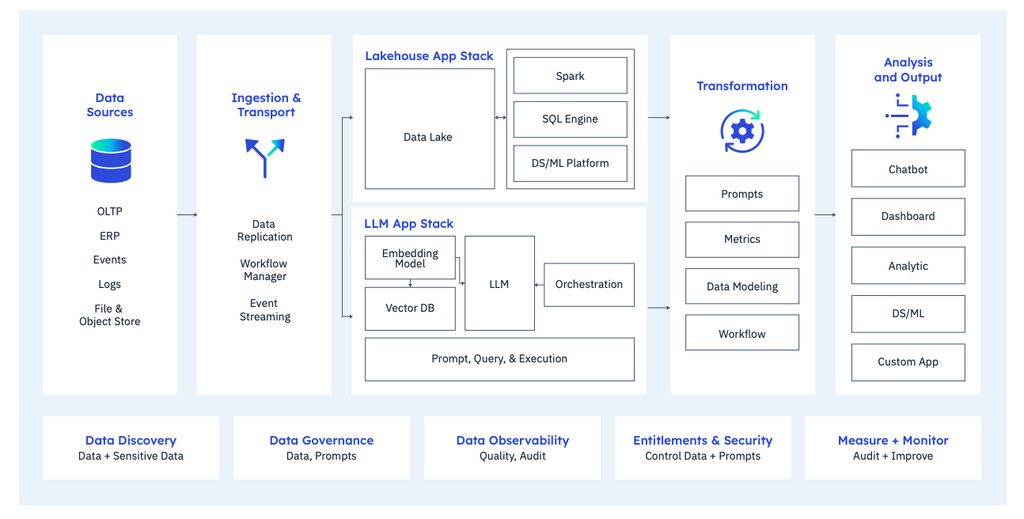

Modern technology and data infrastructure

I will combine the aspects of technology and data modernization into one group since I don’t believe it makes sense to separate them. Andreessen Horowitz introduced this reference architecture for the modern data and technology stack some years ago. It provides a very comprehensive view of all the distinct services and flows that exist across the entire data value chain. One interesting observation is that they highlight a series of services at the bottom of their diagram that are transversal to the rest – i.e. these are services to consider holistically and not to be implemented as silos in each service or flow. These services include Data Discovery, Data Governance, Observability, Entitlements and Security.

I propose this framework as a powerful starting point as you consider modernizing your technology infrastructure in support of GenAI.

We have taken a little liberty by expanding the original to include the aspects of GenAI in this model below.

You can read a more detailed explanation of the thinking in the whitepaper “Rethinking the Modern Data Stack For The Age Of GenAI”. The key aspects from it, in my view:

- The emerging GenAI data estate will ultimately have to be governed in a unified manner along with the rest of your analytical data estate.

- Likewise, the same notions of transversal data governance, security, discovery and monitoring and measuring (which we included since it was missing from the original) will need to apply.

Responsible and Secure GenAI

Building a foundation for secure and responsible GenAI has two levels: (1) your organization’s policies and guidelines as they relate to the use of GenAI, and (2) the implementation of those policies and guidelines as systematic and automated controls across your technology and data stack. For the first, you probably have this in place or are in the process of putting it in place. The second is a little harder and requires a deeper understanding of the inner workings of GenAI.

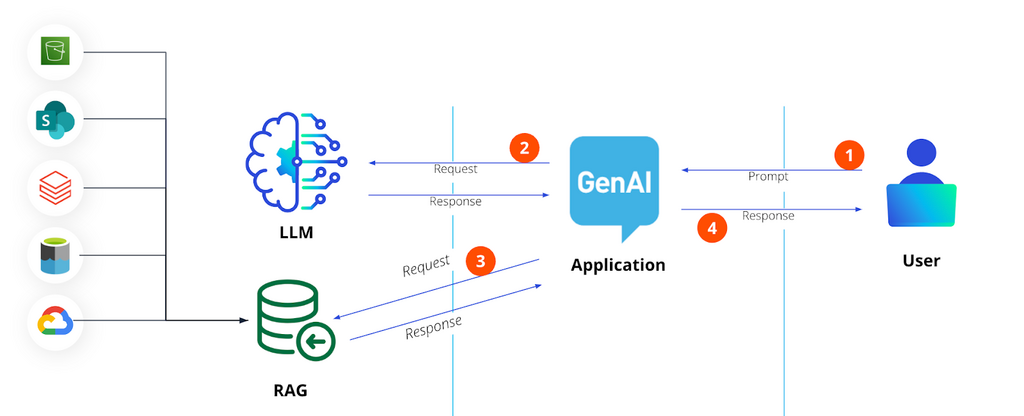

At its most basic level, a GenAI application (like a chatbot you might build to support your customer support agents) consists of a model (e.g. OpenAI) and an application orchestrated through libraries like LangChain. Then there is the part where you store your own company-relevant data, usually in a vector database. The flow is pretty straightforward:

- The user asks a question (e.g. Is Equinox Technologies a customer?)

- The application passes that to the LLM for help with simplification and validation

- That reframed question is then sent to the RAG (retrieval augmented generation) process to return the context records. This usually then gets passed back to the LLM for the final and best answer.

- The answer is sent to the user on the screen.
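The four steps above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `ChatApp`, `fake_llm` and `fake_retrieve` are hypothetical names, the model and the vector database are replaced by local stubs, and no real OpenAI or LangChain call is made.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class ChatApp:
    llm: Callable[[str], str]             # stand-in for a hosted model call
    retrieve: Callable[[str], List[str]]  # stand-in for a vector database lookup

    def answer(self, question: str) -> str:
        # Step 2: ask the model to simplify and validate the question.
        reframed = self.llm(f"Rewrite as a clear search query: {question}")
        # Step 3: fetch context records, then ask the model for the final answer.
        context = self.retrieve(reframed)
        prompt = "Context:\n" + "\n".join(context) + f"\nQuestion: {question}"
        # Step 4: the caller displays the returned string to the user.
        return self.llm(prompt)


def fake_llm(prompt: str) -> str:
    # Hypothetical stub: lower-cases a rewrite request, otherwise counts records.
    if prompt.startswith("Rewrite"):
        return prompt.split(": ", 1)[1].lower()
    return f"answer based on {prompt.count('record:')} record(s)"


def fake_retrieve(query: str) -> List[str]:
    # Hypothetical stub: a one-record "vector store" with naive keyword matching.
    records = ["record: Equinox Technologies is listed as a customer"]
    return [r for r in records if "equinox" in query]


app = ChatApp(llm=fake_llm, retrieve=fake_retrieve)
result = app.answer("Is Equinox Technologies a customer?")
```

Note that the model is called twice and the data store once for a single question. Each of those hops is a place where a control can sit.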

Every interaction carries the risk of security and safety issues.

- What if the user enters a social security number into the prompt and now that is in the system?

- What if the user inputs something toxic or tries to get a toxic response?

- What if the user is from marketing and should not see any contact details for Equinox Technologies?

- What if the LLM and RAG process returns sensitive data in the response and the user should not be allowed to see it?
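To illustrate the first two questions, here is a minimal sketch of a prompt scanner. It assumes a US-style social security number format and a tiny placeholder word list; `scan_text`, `redact_ssn` and `BLOCKED_TERMS` are hypothetical names, and a real deployment would rely on far more robust classifiers than a regular expression and a word set.

```python
import re

# Assumption: SSNs appear in the common 3-2-4 digit format.
SSN_PATTERN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")
# Assumption: a placeholder list, not a real toxicity model.
BLOCKED_TERMS = {"idiot", "stupid"}


def scan_text(text: str) -> dict:
    """Report whether the text contains an SSN-like value or a blocked term."""
    words = set(re.findall(r"[a-z']+", text.lower()))
    return {
        "ssn": bool(SSN_PATTERN.search(text)),
        "toxic": bool(words & BLOCKED_TERMS),
    }


def redact_ssn(text: str) -> str:
    """Replace every SSN-like value with a fixed marker before it is stored."""
    return SSN_PATTERN.sub("<SSN>", text)


findings = scan_text("My SSN is 123-45-6789")
cleaned = redact_ssn("My SSN is 123-45-6789")
```

The same two functions could be run on the response before it reaches the screen, which addresses part of the last question. The role-based questions need identity-aware policies rather than pattern matching.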

To further exacerbate the problem, most applications now will contain multiple models and multiple agents or RAG processes.

What do the AVERAGE projects get wrong?

Based on the conversations we have had with nearly 100 organizations over the past 12 months, a couple of things are apparent.

- The pilot project usually has a small scope and often can be done using a single model. The result is that the technology stack is pretty narrow and more than likely simply follows a single cloud provider’s pattern.

- Most start with public data and do not think of security and governance issues. If some toxic or PII data shows up, they use the guardrail embedded in the cloud provider.

The problem is that the use case grows, and now sensitive or restricted company data gets included. Then they find the possible questions are not simple one-liners like “Is Equinox Technologies a customer?”, but rather “Does our ABC product support access to Amazon EMR and when will we provide support for EMR Serverless?” When this happens, the project grinds to a halt, and the project team is suddenly trying to invent governance and security that go far beyond the realm of the embedded guardrails.

A more comprehensive GenAI security and safety approach

- Govern the end-to-end data lifecycle: A more comprehensive framework needs to manage, secure and safeguard the entire lifecycle of data, from training all the way to every interaction.

- Policy based controls: Security and safety controls need to be systematic and also be able to adapt to the user or role accessing the system. Failing that will require multiple versions of the same app to partition restricted data from the rank and file users.

- Open framework: An extensible framework lets you plug in specialized (and diverse) guardrails and libraries to provide the specific controls your use case and applications might require.
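As a rough sketch of what policy-based controls can look like, the snippet below maps a user role and a data tag to an action, so one application can serve different audiences. The roles, tags, `POLICIES` table and `decide` function are all hypothetical and chosen only for illustration.

```python
from enum import IntEnum
from typing import Iterable


class Action(IntEnum):
    # Ordered so that a higher value is more restrictive.
    ALLOW = 0
    REDACT = 1
    BLOCK = 2


# Assumption: example rules keyed by (role, tag); not taken from any product.
POLICIES = {
    ("marketing", "CONTACT_DETAILS"): Action.REDACT,
    ("marketing", "SSN"): Action.BLOCK,
    ("support", "SSN"): Action.REDACT,
}


def decide(role: str, tags: Iterable[str], default: Action = Action.ALLOW) -> Action:
    """Return the most restrictive action that applies to any tag on the data."""
    actions = [POLICIES.get((role, tag), default) for tag in tags]
    return max(actions, default=default)


marketing_view = decide("marketing", {"CONTACT_DETAILS", "SSN"})
support_view = decide("support", {"CONTACT_DETAILS"})
```

The default here is `ALLOW` to keep the example short. Passing `default=Action.BLOCK` gives a deny-by-default posture, which is usually the safer choice for restricted data.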

Introducing Privacera AI Governance (PAIG)

Privacera AI Governance (PAIG) is a comprehensive GenAI security, safety and observability solution that works independently from your models or RAG technology choices. PAIG is designed on an open and extensible framework to be orchestrated into your application to provide policy-based controls to secure access to sensitive information that might be in your models or RAG systems. PAIG manages all aspects of data security and safety across the entire lifecycle, including:

- Secure Tuning & Training Data

- All training data from source systems is scanned for sensitive data and, based on the application requirements, can be redacted, masked or removed. In addition, PAIG can tag all sensitive or restricted data during the vectorization process and insert those tags as metadata on each vector chunk. Original source permissions can also be inserted as metadata for runtime filtering downstream.

- Secure Model Inputs and Outputs

- All inputs (prompts) and outputs (responses by the application) are scanned in real time for restricted, sensitive or toxic data. Tagged data will then be restricted based on policy controls in place and the user identity to determine whether data should be allowed, redacted or blocked entirely.

- Access Control and Data Filtering for VectorDB/ RAG

- PAIG will filter RAG queries based on the user identity and permissions at runtime to ensure only permissible context records will be returned into the application.

- Comprehensive Compliance Observability

- PAIG will collect all usage data as well as classifications in real time from all your GenAI applications and provide a comprehensive dashboard and audit logs of what sensitive data is leveraged in each model, how it is protected, and who is accessing it.
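The general pattern behind tagging chunks at vectorization time, filtering them at runtime and logging each decision can be sketched as follows. This is not PAIG’s API: `Chunk`, `tag_chunk`, `filter_chunks`, the `EMAIL` pattern and the group names are hypothetical, and a plain Python list stands in for both the vector database and the audit store.

```python
import re
from dataclasses import dataclass
from typing import FrozenSet, Iterable, List, Set

# Assumption: a simple email pattern stands in for a real sensitive-data scanner.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.]+")


@dataclass(frozen=True)
class Chunk:
    text: str
    tags: FrozenSet[str]            # sensitive-data tags found at ingestion
    allowed_groups: FrozenSet[str]  # permissions copied from the source system


def tag_chunk(text: str, source_groups: Iterable[str]) -> Chunk:
    """Attach tags and source permissions as metadata when a chunk is created."""
    tags = {"EMAIL"} if EMAIL.search(text) else set()
    return Chunk(text, frozenset(tags), frozenset(source_groups))


def filter_chunks(chunks: List[Chunk], user_groups: Set[str],
                  denied_tags: Set[str], audit_log: List[dict]) -> List[Chunk]:
    """Keep only chunks the user may see and record every decision."""
    visible = []
    for chunk in chunks:
        permitted = bool(chunk.allowed_groups & user_groups) and not (chunk.tags & denied_tags)
        audit_log.append({"tags": sorted(chunk.tags), "permitted": permitted})
        if permitted:
            visible.append(chunk)
    return visible


chunks = [
    tag_chunk("Equinox Technologies is a customer.", {"sales", "marketing"}),
    tag_chunk("Contact: jane@equinox.example", {"sales", "marketing"}),
]
log: List[dict] = []
visible = filter_chunks(chunks, {"marketing"}, {"EMAIL"}, log)
```

In this example the marketing user gets the customer record as context but not the contact details, and the log holds one entry per chunk considered, which is the raw material for the kind of dashboard and audit trail described above.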

Start your journey to GenAI Excellence today

The potential business value and impact of GenAI is undeniable and perhaps existential for your organization. If you are in the process of piloting or perhaps already have an application in production, you can set yourself on a path for repeatable excellence today. Don’t delay your thinking about security, governance and responsible AI.

For a deeper dive, watch our webinar “How Not To Be Average in GenAI”. If you want to get your hands on PAIG, you can try the open source version at www.paig.ai. Alternatively, if you are interested in learning more about Privacera, reach out to our team and schedule a demo or conversation.