What is a RAG System?

As businesses increasingly adopt Generative AI (GenAI) for everything from content creation to data-driven decision-making, Retrieval-Augmented Generation (RAG) has become a powerful technique for improving the accuracy and relevance of generated text. RAG combines the strengths of pre-trained language models with real-time data retrieval. In a typical RAG pipeline, the application queries external data sources, retrieves relevant information, and passes it to a generative model (such as one of OpenAI's GPT models) to augment the response. This can dramatically improve the quality and relevance of AI-generated content, especially when working with proprietary corporate data.

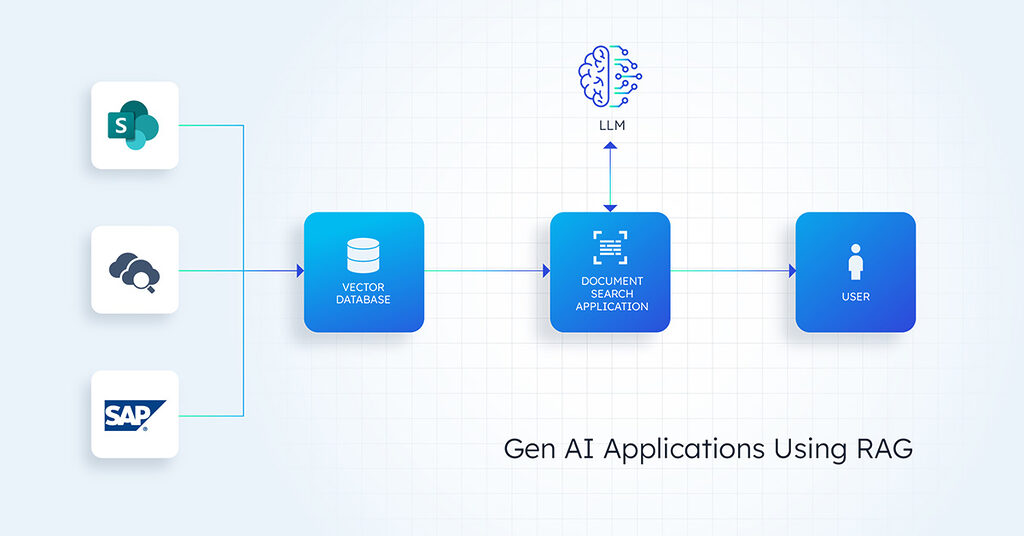

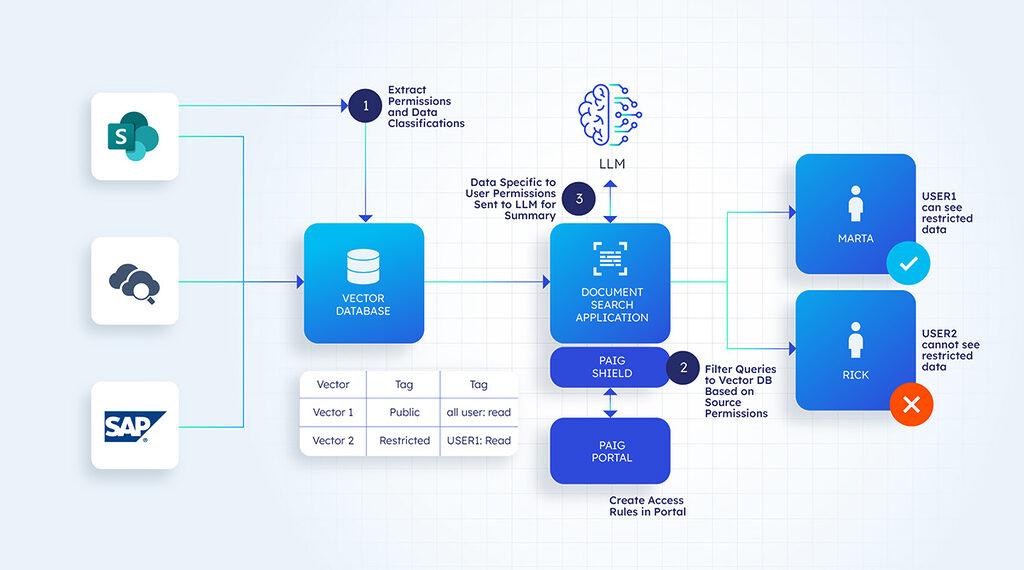

Throughout this blog, we use an example RAG-based application: a company building a document search application. The company stores enterprise data from SharePoint, SAP, and other applications in a vector database. Based on the user's query, the application retrieves the relevant data from the vector database and uses an LLM to summarize it for the end user.

However, as powerful as RAG is, it also introduces significant challenges around data access control. Ensuring that the right users access the right data—especially in sensitive corporate environments—becomes a complex, high-stakes problem. The core issue is how to safeguard proprietary data while allowing AI models to retrieve, process, and utilize that data in a way that aligns with access policies, compliance regulations, and security standards.

RAG systems, which enhance AI models with external data sources, present unique security concerns. These systems often aggregate data from diverse sources such as Confluence Wiki pages, SharePoint, databases, and support tickets. Each of these sources typically has its own access controls, creating a complex web of permissions that must be maintained when data is used in GenAI applications.

Key Challenges in Data Access Control for RAG Systems

1. Dynamic Data Access in Real-Time Retrieval

In a traditional generative AI setup, the model may operate in a closed environment, only generating responses based on pre-existing training data. However, RAG implementations involve querying external databases or document stores for up-to-date or task-specific information. This real-time data retrieval introduces complexity when trying to apply granular access controls.

For example, an employee querying an AI system for customer-related information may legitimately need access to specific documents in an internal database, yet should not be able to query financial records or personnel files. In a RAG system, the application performs dynamic queries against the data store, and the access control system must evaluate the user's identity, the query context, and the user's permissions in real time. Some vector databases provide role-based access control, but it generally applies at the database or collection level and lacks awareness of the user context or of the sensitivity of the data behind individual vector embeddings.
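As a rough illustration of a per-request access decision, the sketch below checks each retrieved document against the requesting user's groups and clearance before anything is passed to the LLM. The sensitivity labels, the `User`/`Document` types, and the `can_read` rule are hypothetical; a real system would pull these attributes from the source systems and could also weigh query context.

```python
from dataclasses import dataclass

# Hypothetical sensitivity ranking; real deployments define their own labels.
SENSITIVITY_RANK = {"public": 0, "internal": 1, "confidential": 2, "restricted": 3}


@dataclass(frozen=True)
class User:
    name: str
    groups: frozenset
    clearance: str  # highest sensitivity label this user may read


@dataclass(frozen=True)
class Document:
    doc_id: str
    sensitivity: str
    allowed_groups: frozenset


def can_read(user: User, doc: Document) -> bool:
    """Evaluated at query time: the user must share a group AND be cleared for the label."""
    shares_group = bool(user.groups & doc.allowed_groups)
    cleared = SENSITIVITY_RANK[user.clearance] >= SENSITIVITY_RANK[doc.sensitivity]
    return shares_group and cleared


def authorized_results(user: User, candidates: list) -> list:
    """Drop retrieved candidates the user may not read before they reach the LLM."""
    return [d for d in candidates if can_read(user, d)]


alice = User("alice", frozenset({"support"}), "internal")
docs = [
    Document("cust-faq", "internal", frozenset({"support", "sales"})),
    Document("q3-financials", "confidential", frozenset({"finance"})),
    Document("hr-file-17", "restricted", frozenset({"hr"})),
]
print([d.doc_id for d in authorized_results(alice, docs)])  # ['cust-faq']
```

Requiring both group membership and clearance means that a misassigned group alone does not expose higher-sensitivity documents.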

2. Data Leakage via AI-Generated Outputs

A core benefit of RAG systems is that they combine generative capabilities with relevant, real-time data. However, this can lead to data leakage if the model inadvertently outputs sensitive information. This happens when sensitive data reaches the model, either through retrieved context or because the model “memorized” it during training, and the model then uses it in a response to a user who does not have direct access to that data.

For example, if a model retrieves proprietary internal reports or private emails and then generates content that paraphrases or summarizes them, this could lead to unintended exposure of confidential corporate data.

Challenge: Ensuring that sensitive or confidential data is not exposed through the outputs generated by the AI system.
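One common complement to input-side filtering is an output-side guardrail that scans each generated response and masks sensitive values before it reaches the user. The sketch below is a minimal, generic version of that idea, not a description of how PAIG works; the two regex patterns are hypothetical examples, and production systems usually rely on trained classifiers or a dedicated PII detection service rather than regexes alone.

```python
import re

# Hypothetical patterns for illustration only; real detectors cover far more types.
SENSITIVE_PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    "US_SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact(text: str) -> str:
    """Replace each sensitive match in a model response with a typed placeholder."""
    for label, pattern in SENSITIVE_PATTERNS.items():
        text = pattern.sub(f"<{label}>", text)
    return text


response = "Contact jane.doe@example.com; her SSN is 123-45-6789."
print(redact(response))
# Contact <EMAIL>; her SSN is <US_SSN>.
```

Typed placeholders keep the answer readable while still signaling to the user that something was withheld.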

3. Fine-Grained Permissions Across Data Sources

RAG systems often pull data from multiple sources: structured databases, unstructured document stores (e.g., SharePoint, Google Docs), or even third-party APIs. Each data source may have different access policies and varying sensitivity levels. A corporate environment may have many users, each requiring a different level of access to each of these sources, which adds operational overhead and complexity.

4. Model Training Data and Access Restrictions

RAG systems rely on pre-trained models, which may also be fine-tuned or adapted to specific data sets, including proprietary business data. In a corporate setting, ensuring that only authorized data is included in the training process is critical to maintaining security and compliance.
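A simple way to enforce this is to gate the fine-tuning corpus on each record's classification label. The sketch below assumes a hypothetical `classification` field and a hypothetical allow-list; it excludes unlabeled records by default, since an unknown label cannot be proven safe.

```python
from typing import Iterable

# Hypothetical policy: which classification labels may be used for fine-tuning.
ALLOWED_FOR_TRAINING = {"public", "internal"}


def select_training_records(records: Iterable[dict]):
    """Split records into (included, excluded) based on their classification label.

    Records with a missing label are excluded: when in doubt, leave it out.
    """
    included, excluded = [], []
    for rec in records:
        label = rec.get("classification")
        (included if label in ALLOWED_FOR_TRAINING else excluded).append(rec)
    return included, excluded


corpus = [
    {"id": "kb-1", "classification": "public", "text": "How to reset a password"},
    {"id": "fin-9", "classification": "confidential", "text": "Q3 revenue forecast"},
    {"id": "misc-3", "text": "Unlabeled export"},
]
keep, drop = select_training_records(corpus)
print([r["id"] for r in keep], [r["id"] for r in drop])
# ['kb-1'] ['fin-9', 'misc-3']
```

Returning the excluded set as well as the included one makes it easy to log what was left out for later review.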

5. Real-Time Data Auditing

For organizations that must comply with stringent regulatory requirements (e.g., GDPR, HIPAA), auditing who accessed what data and when is a non-negotiable requirement. This becomes even more challenging in RAG implementations where data is accessed and used dynamically, sometimes involving multiple actors (users, AI systems, third-party data providers) in a single workflow.
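Auditing a RAG request usually means recording not just who asked what, but which data was actually handed to the model and which candidates were filtered out. The sketch below emits one structured JSON record per request; the field names and the `audit_event` helper are hypothetical and should be aligned with your own compliance or SIEM schema. Note that the raw query text can itself be sensitive under regulations such as GDPR, so retention rules apply to the audit log too.

```python
import json
from datetime import datetime, timezone


def audit_event(user: str, query: str, retrieved: list, denied: list, app: str) -> str:
    """Build one JSON audit record for a single RAG request (hypothetical schema)."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "application": app,
        "user": user,
        "query": query,                  # may need masking or limited retention
        "retrieved_doc_ids": retrieved,  # data that was sent to the LLM
        "denied_doc_ids": denied,        # candidates filtered out by policy
    }
    return json.dumps(record)


line = audit_event("alice", "Summarize open tickets for ACME",
                   ["t-101", "t-102"], ["fin-9"], "doc-search")
print(line)
```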

6. Insider Threats and Misconfigurations

While much attention is given to external threats, insider threats—whether malicious or accidental—are a significant concern in RAG systems. Human errors, such as misconfiguring access policies or granting overly broad access, can lead to significant security breaches. In addition, users with elevated privileges (such as data scientists or AI engineers) may unintentionally expose sensitive information during experimentation or model training.
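Some misconfigurations can be caught automatically. For instance, a periodic scan can flag grants that expose confidential or restricted data to broad principals such as "everyone". The policy record shape and the principal names below are hypothetical; a real check would read policies from your access control system.

```python
# Hypothetical names for principals that cover (almost) all users.
BROAD_PRINCIPALS = {"everyone", "all-employees", "*"}
HIGH_SENSITIVITY = {"confidential", "restricted"}


def find_risky_grants(policies):
    """Flag grants that expose high-sensitivity resources to broad principals."""
    return [
        p for p in policies
        if p["principal"] in BROAD_PRINCIPALS and p["sensitivity"] in HIGH_SENSITIVITY
    ]


policies = [
    {"principal": "finance", "resource": "sharepoint:/finance/q3", "sensitivity": "confidential"},
    {"principal": "everyone", "resource": "sharepoint:/hr/salaries", "sensitivity": "restricted"},
    {"principal": "everyone", "resource": "confluence:/wiki/onboarding", "sensitivity": "public"},
]
for p in find_risky_grants(policies):
    print(f"Review: {p['principal']} -> {p['resource']} ({p['sensitivity']})")
```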

How does PAIG help with fine-grained access control in RAG systems?

PAIG provides an easy-to-use, robust framework for companies to build context-based access control into their RAG implementations.

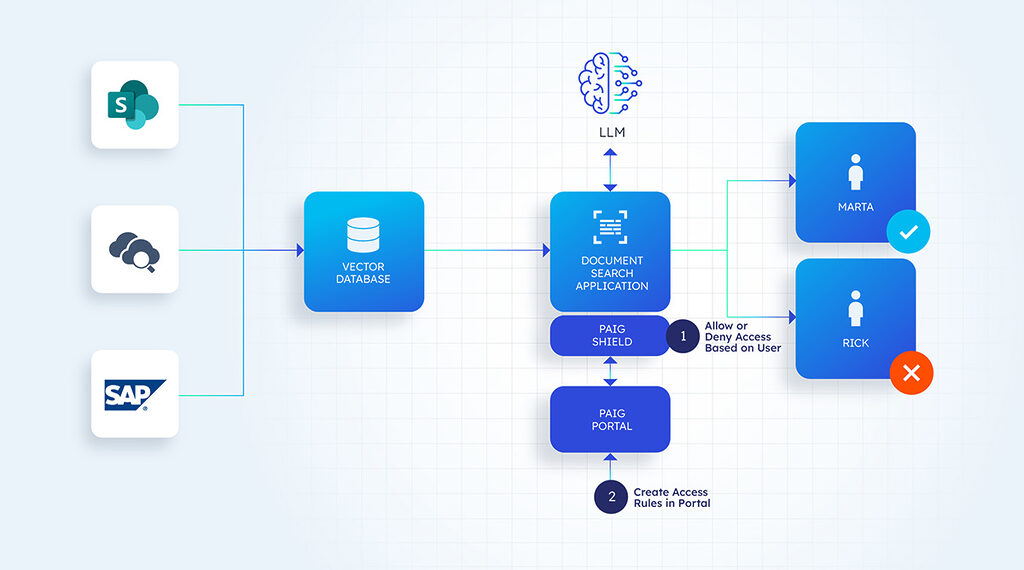

Let's continue with the earlier example of a company building a document search application. PAIG integrates quickly with applications built on LangChain and has a few components that help in this process. The easy-to-use PAIG portal lets users set policies and review audit activity. The PAIG Shield plugs into LangChain and is invoked as part of the user conversation flow. You can find more details here.

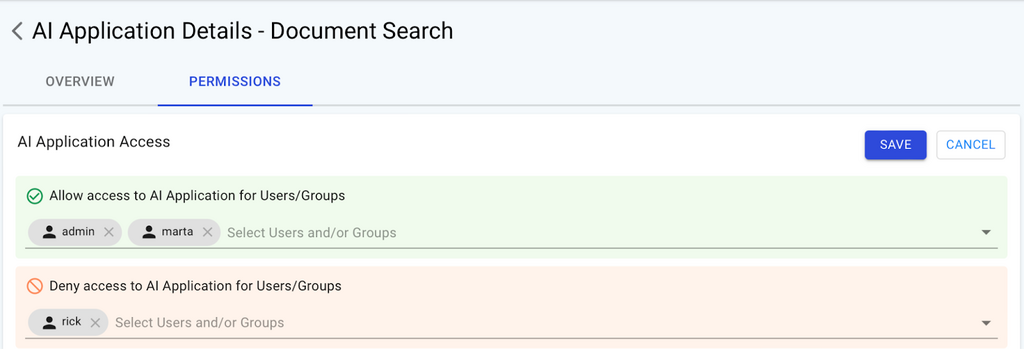

1. Access control to specific applications

Once PAIG is installed, you can use the PAIG portal to build policies: create an application in the portal for each GenAI application, then set policies within that application.

Once set up, the PAIG shield is invoked in the conversation flow and can allow or deny users based on the policies.
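The allow/deny step can be pictured as a check that runs before the chain is invoked. PAIG Shield's real integration is configured through the PAIG portal and its client library; the `APP_POLICIES` table, the `shielded` decorator, and the `AccessDenied` exception below are hypothetical names that only illustrate the pattern, with a plain function standing in for a LangChain chain.

```python
from functools import wraps

# Hypothetical application-level policy: which users/groups may use which app.
APP_POLICIES = {
    "doc-search": {"users": {"alice"}, "groups": {"support", "sales"}},
}


class AccessDenied(Exception):
    pass


def shielded(app_name):
    """Decorator that checks the app policy before the wrapped chain runs."""
    def decorator(fn):
        @wraps(fn)
        def wrapper(user, groups, prompt):
            # Unknown applications get an empty policy, i.e. default deny.
            policy = APP_POLICIES.get(app_name, {"users": set(), "groups": set()})
            if user not in policy["users"] and not (set(groups) & policy["groups"]):
                raise AccessDenied(f"{user} may not use {app_name}")
            return fn(user, groups, prompt)
        return wrapper
    return decorator


@shielded("doc-search")
def run_chain(user, groups, prompt):
    # Stand-in for a LangChain chain or LLM call.
    return f"answer for: {prompt}"


print(run_chain("bob", ["sales"], "Where is the pricing deck?"))
try:
    run_chain("mallory", ["contractors"], "Show me salaries")
except AccessDenied as err:
    print("denied:", err)
```

Defaulting to deny for applications with no policy keeps a newly deployed app closed until someone explicitly grants access.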

2. Context-based data filtering

Access control to specific applications is a good start, but it is not comprehensive. PAIG excels at helping companies build context-based access control and carry forward existing security classifications and policies into their GenAI applications.

For example, let us assume the documents in SharePoint have specific data classifications and security rules governing who can access each document. PAIG provides libraries that carry forward these classifications and access rules and embed them in the vector database as additional metadata.
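Conceptually, carrying permissions forward means that every chunk written to the vector database inherits the classification and access list of its parent document. The sketch below shows that idea; the permission export format, the `to_chunks_with_metadata` helper, and the metadata keys (`users`, `groups`, `classification`) are hypothetical, since PAIG's libraries and your source connector define their own conventions. The embedding step is omitted.

```python
# Hypothetical shape of a SharePoint-like permission export; real connectors differ.
source_docs = [
    {"path": "/sites/finance/q3-forecast.docx", "classification": "confidential",
     "acl": {"users": ["cfo@corp.example"], "groups": ["finance"]}, "text": "..."},
    {"path": "/sites/support/faq.docx", "classification": "internal",
     "acl": {"users": [], "groups": ["support", "sales"]}, "text": "..."},
]


def to_chunks_with_metadata(doc, chunk_size=500):
    """Split a document and copy its classification and ACL onto every chunk.

    Because each chunk carries its parent's permissions, retrieval can enforce
    them later without calling back to the source system.
    """
    text = doc["text"]
    chunks = [text[i:i + chunk_size] for i in range(0, max(len(text), 1), chunk_size)]
    return [
        {
            "text": chunk,
            "metadata": {
                "source": doc["path"],
                "classification": doc["classification"],
                "users": doc["acl"]["users"],
                "groups": doc["acl"]["groups"],
            },
        }
        for chunk in chunks
    ]


records = [c for d in source_docs for c in to_chunks_with_metadata(d)]
print(records[0]["metadata"])
```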

At runtime, the PAIG Shield can modify the query to the vector database so that it fetches only the vectors (data) the user can access in the source system. Only user-specific data is sent to the LLM for summarization and presented to the end user.
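The effect of rewriting the query can be sketched with a toy in-memory store: a per-user permission predicate is applied to each entry's ACL metadata, and only permitted entries are ranked by cosine similarity. The store layout, the hand-written 3-dimensional embeddings, and the `user_filter`/`search` helpers are hypothetical; real vector databases express this as a metadata filter in their own query syntax.

```python
import math

# Toy in-memory "vector DB": each entry has an embedding plus ACL metadata.
# Embeddings are hand-written 3-d vectors purely for illustration.
STORE = [
    {"id": "faq-1", "vec": [0.9, 0.1, 0.0], "metadata": {"users": [], "groups": ["support"]}},
    {"id": "fin-1", "vec": [0.8, 0.2, 0.1], "metadata": {"users": ["cfo"], "groups": ["finance"]}},
    {"id": "hr-1", "vec": [0.1, 0.9, 0.3], "metadata": {"users": [], "groups": ["hr"]}},
]


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def user_filter(user, groups):
    """Build the per-user predicate that is added to every vector query."""
    groups = set(groups)

    def allowed(meta):
        return user in meta["users"] or bool(groups & set(meta["groups"]))
    return allowed


def search(query_vec, k, allowed):
    """Apply the permission filter first, then rank only the permitted entries."""
    permitted = [e for e in STORE if allowed(e["metadata"])]
    permitted.sort(key=lambda e: cosine(query_vec, e["vec"]), reverse=True)
    return [e["id"] for e in permitted[:k]]


query = [1.0, 0.15, 0.05]
print(search(query, k=2, allowed=user_filter("alice", ["support"])))  # ['faq-1']
print(search(query, k=2, allowed=user_filter("cfo", ["finance"])))    # ['fin-1']
```

Filtering before ranking is a deliberate choice: if a system instead took the global top-k and then removed forbidden hits, a user could receive fewer than k results, and the unfiltered ranking would have already touched data the user may not see.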

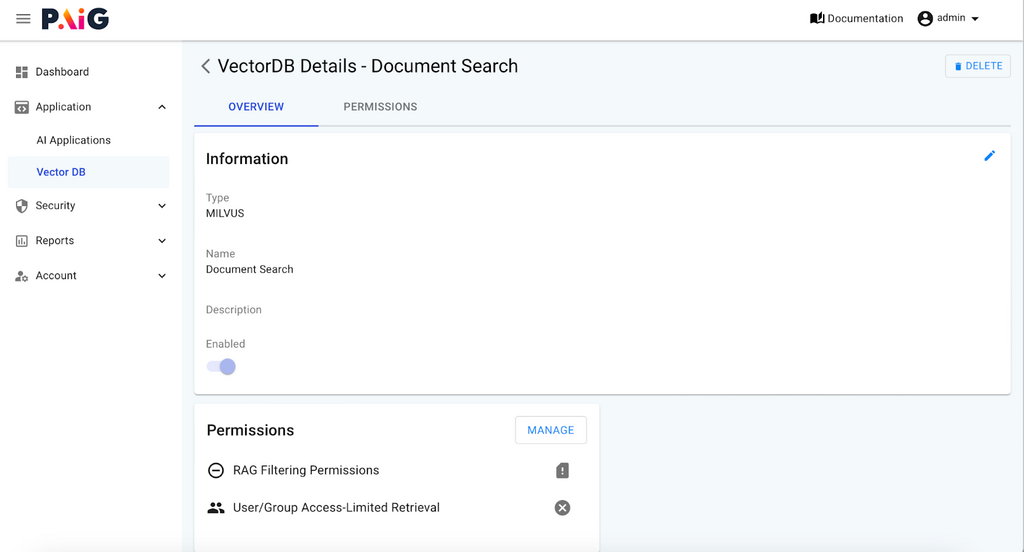

RAG filtering can be enabled by going to Applications → Vector DB in the PAIG application. When enabled, PAIG automatically uses the source permissions stored in the vector DB to decide which users can see which data.

More details on RAG filtering can be found here.

3. Auditing and reporting

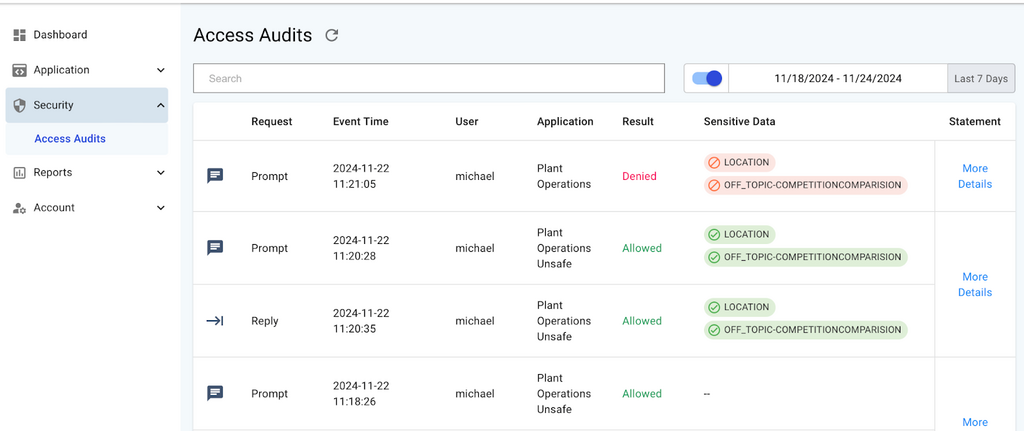

PAIG records the context of each user conversation and its interaction flow in one central place, where compliance and security teams can review the audits. Developers can use this provenance to answer questions from the compliance and security teams.

Audits can be checked in the portal under Security → Access Audits.

Why PAIG Is Essential for RAG Systems in GenAI Applications

As enterprises continue exploring the potential of GenAI and RAG systems, robust security measures are becoming increasingly critical. PAIG offers a comprehensive, flexible, and open-source solution that addresses the complex access control challenges inherent in these technologies. By implementing PAIG, organizations can confidently innovate with GenAI, knowing that their sensitive data and intellectual property are protected by best-in-class security measures.

If you are interested in a deeper conversation on PAIG, you can schedule a demo here or sign up for our self-service trial here.