Generative AI (GenAI) stands to change most, if not all, business functions, with the largest impact expected in customer operations, marketing and sales, software engineering, and R&D. Beyond this, GenAI has tremendous potential to change how workers do their jobs. Because these use cases are diverse and many involve sensitive data or personally identifiable information (PII), organizations need a way to protect the sensitive data that flows into large language models (LLMs) and to ensure that only appropriate data is shared with GenAI application users.

Key Benefits:

- Securely Innovate

- Protect PII

- Prevent IP Leakage

- Continuously Monitor and Audit

Securely Innovate

GenAI offers enterprises the chance to differentiate their businesses and transform the way workers do their jobs. The productivity value should not be underestimated. However, organizations need GenAI security and governance frameworks to avoid damaging corporate reputation or causing compliance issues. To innovate securely, organizations need the ability to detect inappropriate sharing of content and potential security threats, such as unusual user behavior or brute force attacks, and to respond to them swiftly.

Protect PII

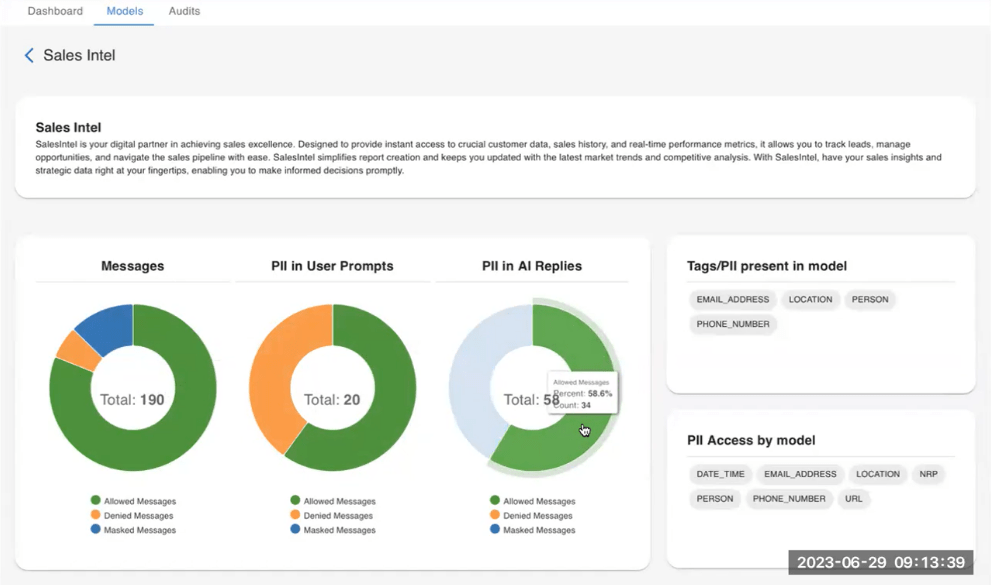

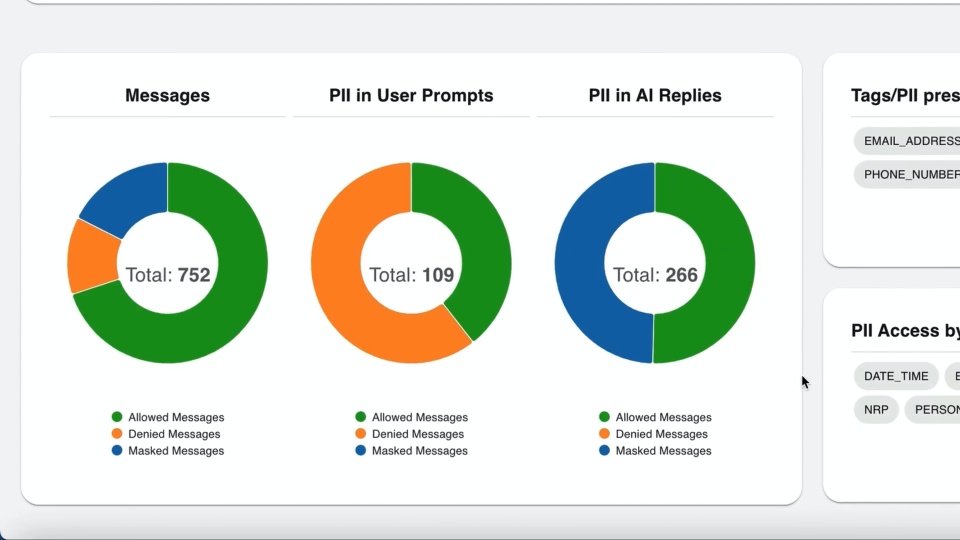

The value of LLMs and their associated vector databases and embeddings depends on the data used to build them. As a result, PII will often be part of the training data or embeddings, so GenAI applications must be user and context aware to ensure that only authorized users can see relevant PII. GenAI applications need to identify PII attributes in user prompts as well as in application responses, and to allow, deny, or redact those elements based on the access policies and controls in place. This ability supports regulatory compliance by addressing privacy, security, and ethical requirements, including GDPR and CCPA mandates.
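The allow, deny, or redact decision described above can be illustrated with a minimal Python sketch. Everything in it is an assumption made for illustration: the two regular expressions stand in for a real PII detector, and the role names, the `POLICY` table, and the `apply_policy` function are hypothetical rather than part of any specific product.

```python
import re
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    DENY = "deny"
    REDACT = "redact"


# Hypothetical detectors: two simple regexes, nowhere near exhaustive.
PII_PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}

# Hypothetical access policy: role -> PII type -> action.
# Anything not listed falls back to REDACT.
POLICY = {
    "hr_admin": {"email": Action.ALLOW, "us_ssn": Action.ALLOW},
    "analyst": {"email": Action.ALLOW, "us_ssn": Action.REDACT},
    "contractor": {"email": Action.REDACT, "us_ssn": Action.DENY},
}


def apply_policy(text, role):
    """Check a prompt or response against the policy for `role`.

    Returns (text, findings). The text is None when any detected PII type
    is denied for the role; otherwise redacted types are masked in place.
    """
    rules = POLICY.get(role, {})
    findings = []
    for pii_type, pattern in PII_PATTERNS.items():
        if not pattern.search(text):
            continue
        action = rules.get(pii_type, Action.REDACT)
        findings.append((pii_type, action))
        if action is Action.DENY:
            return None, findings
        if action is Action.REDACT:
            text = pattern.sub(f"[{pii_type.upper()} REDACTED]", text)
    return text, findings


message = "Contact jane@example.com, SSN 123-45-6789"
analyst_view, _ = apply_policy(message, "analyst")
contractor_view, _ = apply_policy(message, "contractor")
```

The same function can be applied to both the user prompt and the model response, so the policy is enforced in both directions. Defaulting unknown roles and unlisted PII types to redaction is a design choice that fails closed.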

Prevent IP Leakage

GenAI solutions have the opportunity to completely transform the speed at which data is provided to decision makers. However, the data provided for training or embeddings can be sensitive in nature and can have a real business impact if it is shared with unauthorized users inside the organization or with anyone outside it. For this reason, GenAI systems need to prevent users from loading sensitive data unless they are explicitly authorized to do so. Similarly, the system must be able to prevent GenAI from leaking intellectual property or other sensitive information.
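As a rough sketch of the two controls described above, the Python example below gates document loading on an explicit permission and filters retrieved documents by the requesting user's clearance before they reach the model. The sensitivity levels, the `Document` and `User` types, and both function names are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass

# Hypothetical sensitivity ordering; higher numbers are more restricted.
LEVELS = {"public": 0, "internal": 1, "confidential": 2}


@dataclass(frozen=True)
class Document:
    doc_id: str
    sensitivity: str
    text: str


@dataclass(frozen=True)
class User:
    name: str
    clearance: str
    can_ingest_sensitive: bool = False


def authorize_ingest(user, doc):
    """Allow loading non-public documents only for explicitly authorized users."""
    if LEVELS[doc.sensitivity] == 0:
        return True
    return user.can_ingest_sensitive


def filter_context(user, docs):
    """Keep only retrieved documents at or below the user's clearance."""
    limit = LEVELS[user.clearance]
    return [d for d in docs if LEVELS[d.sensitivity] <= limit]


roadmap = Document("d1", "confidential", "Unreleased product roadmap")
handbook = Document("d2", "public", "Employee handbook")
alice = User("alice", "confidential", can_ingest_sensitive=True)
bob = User("bob", "internal")

visible_to_bob = filter_context(bob, [roadmap, handbook])
```

Here `bob` can neither load the roadmap nor receive it as model context, while `alice` can do both.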

Continuously Monitor and Audit

The GenAI governance solution should maintain comprehensive, securely accessed audit trails across all apps and models. This includes the ability to audit prompts, responses, and actions taken in order to provide accountability and transparency.
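One way to picture such an audit trail is the minimal Python sketch below, which records each prompt, response, and action taken. The hash chaining between records is an assumption added here to make tampering detectable; it is not something the text above prescribes, and the `AuditTrail` class and its field names are hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone


class AuditTrail:
    """In-memory, append-only audit log with hash-chained records."""

    GENESIS = "0" * 64  # placeholder hash preceding the first record

    def __init__(self):
        self._records = []

    @staticmethod
    def _digest(entry):
        # Hash every field except the stored hash itself.
        body = {k: v for k, v in entry.items() if k != "hash"}
        payload = json.dumps(body, sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()

    def record(self, user, app, prompt, response, action):
        """Append one interaction and link it to the previous record."""
        prev_hash = self._records[-1]["hash"] if self._records else self.GENESIS
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "app": app,
            "prompt": prompt,
            "response": response,
            "action": action,  # e.g. "allow", "deny", "redact"
            "prev_hash": prev_hash,
        }
        entry["hash"] = self._digest(entry)
        self._records.append(entry)
        return entry

    def verify(self):
        """Return True if no record was altered, removed, or reordered."""
        prev_hash = self.GENESIS
        for entry in self._records:
            if entry["prev_hash"] != prev_hash:
                return False
            if entry["hash"] != self._digest(entry):
                return False
            prev_hash = entry["hash"]
        return True


trail = AuditTrail()
trail.record("bob", "support-bot", "Show order 42", "Order 42 shipped", "allow")
trail.record("bob", "support-bot", "Show customer SSN", "", "deny")
intact = trail.verify()
```

A production system would persist these records in access-controlled storage; the in-memory list is used only to keep the sketch self-contained.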