Introduction

In today’s data-driven world, organizations are increasingly reliant on data to fuel decision-making, innovation, and competitive advantage. However, with vast amounts of data comes an inherent challenge: ensuring that it is both easily accessible and securely governed. In this landscape, data catalogs and data security governance play pivotal roles in facilitating seamless, secure, and auditable data access. By unifying these two capabilities, organizations can streamline access, enhance compliance, and improve security without sacrificing efficiency or agility.

Comprehensive data governance

The Role of Data Catalogs in the Data Stack

A data catalog is the central repository where metadata about an organization’s data assets is stored and organized. It is a comprehensive inventory of the data available in the organization, along with its definitions, relationships, and attributes. Within a larger data ecosystem, data catalogs are critical to making data discoverable and manageable for all users. In addition to technical metadata, data catalogs often contain elements that make it easier for data users to understand and interact with the data. For instance, business glossaries provide business-friendly descriptions of technical metadata to help data consumers.

Data Catalogs: A Documented Record of Policies

Another aspect of making data usable and understandable is documenting the policies that describe how it can or should be used. For instance, a policy pertaining to GDPR could describe how customer data may be used, and that policy can be added to the catalog. Data catalogs are a natural location for capturing these written policies because they can act as the centralized source of truth for data management. These policies dictate how data should be handled, stored, accessed, and shared across departments. By centralizing them in the catalog, organizations ensure consistency, clarity, and transparency in how their data should be governed.

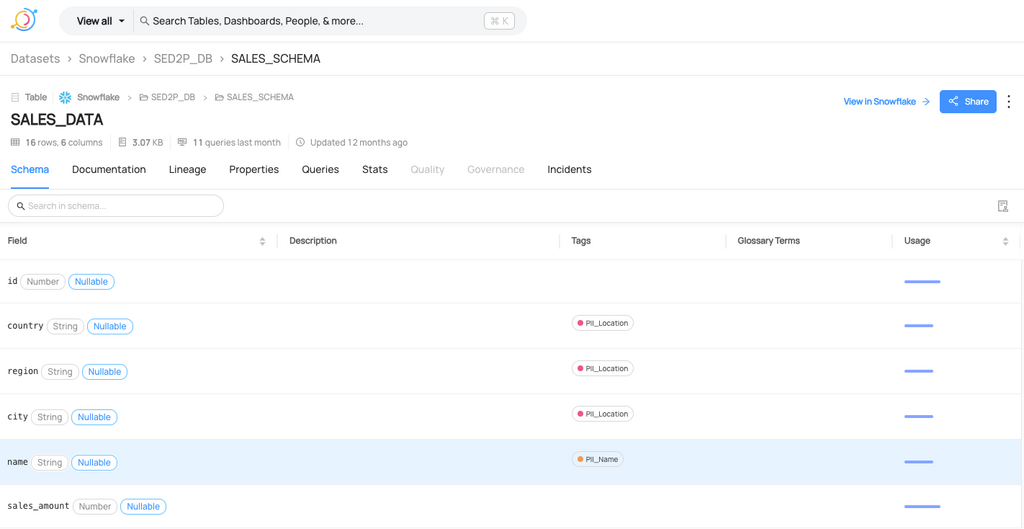

Typical data catalog view with metadata

Why Data Catalogs Are Part of a Data Governance Solution

Data catalogs are an integral part of a comprehensive data governance solution because they enable efficient management of data assets and, together with data security and access governance tools, help ensure compliance with internal and external data governance policies. They provide visibility, accountability, and transparency into data access and usage, making it easier to apply governance frameworks, enforce security policies, and maintain compliance across a large organization.

The Role of Data Security and Access Governance

While data catalogs focus on organizing and making data discoverable, data security governance ensures that the right people have access to the right data at the right time, and that sensitive data is protected from unauthorized access or misuse.

What Is Data Security and Access Governance?

Data security and access governance refers to the policies, practices, and tools used to control access to data and to protect it. In other words, where the data catalog contains a documented version of a policy, data security governance enforces the controls and rules that implement that policy. It is designed to ensure that only authorized users or systems can access sensitive data while meeting regulatory compliance requirements. This involves establishing clear access controls, monitoring data activity, and implementing data masking, encryption, and auditing.

What Data Powers Data Security and Access Governance?

Data security governance is powered by the metadata and information captured in data catalogs, which can include:

- Data classifications (e.g., Public, Restricted, etc.)

- Data lineage

- Access control policies

- User roles and permissions

- Audit logs

Key Information Captured by Data Catalogs Relevant to Data Security and Access Governance

A robust data catalog captures information that data security and access governance depends on, including:

- Data classification: Labels or tags that categorize data based on sensitivity (e.g., public, internal, restricted, classified).

- Business metadata: Includes details like ownership and purpose.

- Technical metadata: Details about the data such as table name and column name.

- Data lineage: The tracking and visualization of the flow and transformation of data as it moves through an organization’s data systems.

- Data access history: Information about who has accessed or modified data, when, and why.

- Sensitive data identifiers: Information about specific fields in the data, such as Personally Identifiable Information (PII) or Protected Health Information (PHI), that need extra protection.
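
As a rough illustration of how the items above might sit together in one record, here is a minimal Python sketch. The class and field names (`CatalogEntry`, `ColumnEntry`, `AccessEvent`, and so on) are assumptions made for this example; they are not the schema of DataHub, Privacera, or any other product.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from enum import Enum


class Classification(Enum):
    """Sensitivity labels; the four levels mirror the examples in the list above."""
    PUBLIC = "public"
    INTERNAL = "internal"
    RESTRICTED = "restricted"
    CLASSIFIED = "classified"


@dataclass
class ColumnEntry:
    name: str                                              # technical metadata
    sensitive_tags: set[str] = field(default_factory=set)  # e.g. {"PII"}


@dataclass
class AccessEvent:
    user: str
    action: str      # "read" or "write"
    timestamp: str   # ISO 8601 string
    reason: str


@dataclass
class CatalogEntry:
    table_name: str                  # technical metadata
    classification: Classification   # data classification
    owner: str                       # business metadata
    purpose: str                     # business metadata
    columns: list[ColumnEntry] = field(default_factory=list)
    upstream: list[str] = field(default_factory=list)            # lineage
    access_history: list[AccessEvent] = field(default_factory=list)

    def sensitive_columns(self) -> list[str]:
        """Names of columns that carry at least one sensitive tag."""
        return [c.name for c in self.columns if c.sensitive_tags]


# Hypothetical entry, for illustration only.
entry = CatalogEntry(
    table_name="crm.customers",
    classification=Classification.RESTRICTED,
    owner="sales-data-team",
    purpose="Customer relationship management",
    columns=[ColumnEntry("customer_id"), ColumnEntry("email", {"PII"})],
    upstream=["raw.crm_export"],
)
print(entry.sensitive_columns())  # ['email']
```

A governance tool reading such a record would only need the classification and the sensitive tags to decide which protections apply; the remaining fields support discovery and auditing.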

What Is the Role of Data Scanning and Classification in Data Governance?

Data scanning and classification help identify and manage sensitive data in your data stores, supporting compliance with data protection regulations (e.g., GDPR, HIPAA). This component of data security governance scans data repositories to identify sensitive data (such as PII or PHI), then classifies and tags it accordingly. This process lets organizations automatically apply the appropriate governance policies to sensitive data, ensuring it’s properly secured.
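
To make the scan-then-tag idea concrete, the sketch below tags a column when most of its sampled values match a known pattern. It is a deliberately simple, pattern-based illustration: the two regular expressions, the 0.8 threshold, and the function name `classify_columns` are assumptions for this example, and production scanners typically combine many more signals.

```python
import re

# Illustrative patterns only; real scanners use far richer rule sets.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(\.[\w-]+)+"),
    "US_SSN": re.compile(r"\d{3}-\d{2}-\d{4}"),
}


def classify_columns(rows, threshold=0.8):
    """Tag a column when at least `threshold` of its non-empty values match a pattern."""
    tags = {}
    columns = list(rows[0].keys()) if rows else []
    for col in columns:
        values = [str(r.get(col)) for r in rows if r.get(col) not in (None, "")]
        if not values:
            continue
        for tag, pattern in PATTERNS.items():
            hits = sum(1 for v in values if pattern.fullmatch(v))
            if hits / len(values) >= threshold:
                tags.setdefault(col, set()).add(tag)
    return tags


# Hypothetical sample rows from a scanned table.
sample = [
    {"id": 1, "contact": "ana@example.com", "tax_id": "123-45-6789"},
    {"id": 2, "contact": "li@example.org", "tax_id": "987-65-4321"},
    {"id": 3, "contact": "", "tax_id": "111-22-3333"},
]
print(classify_columns(sample))  # {'contact': {'EMAIL'}, 'tax_id': {'US_SSN'}}
```

Using a threshold rather than requiring every value to match tolerates a few malformed or empty values in an otherwise sensitive column.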

Why Should Data Security Governance and Data Catalogs Exchange Data?

For organizations to efficiently govern data access, data catalogs and data security governance systems must share data with one another. By doing so, data security governance solutions can leverage the data catalog’s metadata (including classifications and lineage) to apply access policies and protections automatically.

For instance, if data is classified as restricted in a data catalog, the data security governance system can use that information to implement access restrictions, encryption, or masking, ensuring that only authorized users can access sensitive information. Similarly, data security and access governance solutions should share information relating to audits and user activity back into the data catalog to enable comprehensive auditing of who accessed which data, when, and why.
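
The two directions of this exchange can be sketched in a few lines of Python. Everything here is hypothetical: the `PROTECTIONS` table, the group names, and the dictionary-based catalog entry stand in for whatever the catalog and the governance system actually expose.

```python
from datetime import datetime, timezone

# Assumed mapping from catalog classification to protections (illustrative values).
PROTECTIONS = {
    "public": {"require_group": None, "mask": False, "encrypt": False},
    "internal": {"require_group": "employees", "mask": False, "encrypt": False},
    "restricted": {"require_group": "data-stewards", "mask": True, "encrypt": True},
}


def protections_for(catalog_entry):
    """Catalog -> governance: derive protections from the entry's classification.

    Unknown labels fall back to the strictest rule rather than to open access.
    """
    return PROTECTIONS.get(catalog_entry["classification"], PROTECTIONS["restricted"])


def record_access(catalog_entry, user, user_groups, reason):
    """Governance -> catalog: decide on access, then append an audit event to the entry."""
    rule = protections_for(catalog_entry)
    allowed = rule["require_group"] is None or rule["require_group"] in user_groups
    event = {
        "user": user,
        "allowed": allowed,
        "masked": allowed and rule["mask"],
        "reason": reason,
        "at": datetime.now(timezone.utc).isoformat(),
    }
    catalog_entry.setdefault("audit", []).append(event)
    return event


table = {"name": "crm.customers", "classification": "restricted"}
print(record_access(table, "ana", {"data-stewards"}, "quarterly report")["allowed"])  # True
print(record_access(table, "li", {"employees"}, "ad hoc query")["allowed"])           # False
print(len(table["audit"]))                                                            # 2
```

Denied attempts are recorded as well, since auditors usually want to see who tried to reach sensitive data, not only who succeeded.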

Why Is It Critical for Compliance and Security Teams to Connect Data Catalogs and Data Security Governance?

Compliance and privacy auditors require complete visibility into an organization’s data usage and access patterns. By connecting data catalogs with data access governance systems, auditors can:

- Trace data lineage to understand where sensitive data resides and how it is used.

- Review access logs to see who has accessed sensitive data and whether they had the appropriate permissions.

- Ensure compliance by verifying that sensitive data is being handled in accordance with organizational and regulatory requirements.

A unified approach to data cataloging and data security governance provides auditors with a single source of truth, making the audit process more streamlined, transparent, and accurate.
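
The access-log review described above amounts to joining three inputs: which tables the catalog marks as sensitive, which grants the governance system holds, and what the access log recorded. The following is a minimal sketch under those assumptions; the data shapes and the name `find_violations` are invented for illustration.

```python
def find_violations(access_log, grants, sensitive_tables):
    """Return log events that touched a sensitive table without a matching grant."""
    violations = []
    for event in access_log:
        table = event["table"]
        if table in sensitive_tables and table not in grants.get(event["user"], set()):
            violations.append(event)
    return violations


# Hypothetical inputs.
sensitive_tables = {"crm.customers"}                        # from catalog classifications
grants = {"ana": {"crm.customers"}, "li": {"web.clicks"}}   # from the governance system
access_log = [
    {"user": "ana", "table": "crm.customers", "at": "2024-05-01T09:00:00Z"},
    {"user": "li", "table": "crm.customers", "at": "2024-05-01T09:05:00Z"},
    {"user": "li", "table": "web.clicks", "at": "2024-05-01T09:06:00Z"},
]

for v in find_violations(access_log, grants, sensitive_tables):
    print(v["user"], v["table"], v["at"])
# li crm.customers 2024-05-01T09:05:00Z
```

When the catalog and the governance system are not connected, an auditor has to assemble these three inputs by hand from separate tools before a check like this is even possible.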

The Pitfalls of Data Access Management Using Native Cloud Controls

Many organizations approach data access and security by simply using the native controls provided by each database or cloud platform. Because those controls are siloed per system, this approach leads to inefficiencies, compliance risks, and security gaps. Some of the key problems with siloed data access and security governance include:

- Slow Data Access Provisioning:

  - End users often spend too much time waiting for access to the data they need. This is because provisioning is done through manual processes or disconnected systems that don’t share data about permissions or access controls.

  - For example, if a new employee needs access to data spread across AWS S3, Redshift, and a Databricks table, the access controls and permissions must be created one system at a time, using vastly different user interfaces and paradigms.

- Cumbersome Access Control Processes:

  - Platform teams waste significant time chasing down approvals and enforcing access control manually. Without integration between catalogs and security systems, this becomes a tedious and error-prone process.

  - For example, the native controls in each database are defined at the resource level (table, database, file, S3 bucket). If a new dataset containing CLINICAL RESEARCH data is loaded, administrators have to manually adjust the permissions of every user who is allowed to access CLINICAL data, one database at a time.

- Manual Sensitive Data Identification:

  - Identifying sensitive data, like PII or PHI, is often done manually, making it prone to human error and inconsistencies. Incomplete or inaccurate classification can lead to data breaches or compliance violations.

  - For example, data lakes (such as AWS S3) are often used as a storage or dumping ground for data. In many cases, data, security, and privacy teams have no easy way to know what sensitive data might be located in these repositories.

- Tracking Sensitive Data Is Difficult:

  - As sensitive data is copied or moved between tables, the process of identifying and securing it is repeated, creating inefficiencies and leaving room for security vulnerabilities. Manual processes are particularly prone to error in this regard.

  - For example, because the native controls for AWS S3 (IAM roles) are coarse-grained, any person with access to a bucket can see everything in it. One way organizations mitigate this is to copy the data into another bucket and grant access to the new bucket instead. The resulting proliferation of sensitive data increases risk across the entire data estate.
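
The administrative cost of resource-level controls can be shown with some simple counting. In this sketch the users, systems, and resource names are all made up; the point is only that every (user, system, resource) combination is a separate grant that someone has to create and maintain.

```python
# Hypothetical users who are allowed to work with clinical data.
clinical_users = ["ana", "li", "sam"]

# Hypothetical clinical resources, grouped by the system whose native controls govern them.
clinical_resources = {
    "s3": ["s3://research/trials/"],
    "redshift": ["clinical.trials"],
    "databricks": ["research.clinical.trials"],
}


def resource_level_grants(users, resources_by_system):
    """List every (user, system, resource) grant that must exist under resource-level controls."""
    return [
        (user, system, resource)
        for system, resources in resources_by_system.items()
        for resource in resources
        for user in users
    ]


before = resource_level_grants(clinical_users, clinical_resources)

# A new clinical dataset lands in one system.
clinical_resources["redshift"].append("clinical.new_study")
after = resource_level_grants(clinical_users, clinical_resources)

new_grants = [g for g in after if g not in before]
print(len(before), len(new_grants))  # 9 3
```

Three users across three systems already require nine grants, and each new dataset adds one more grant per user, applied through that system's own interface.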

Introducing the Integrated Privacera/Catalog Solution

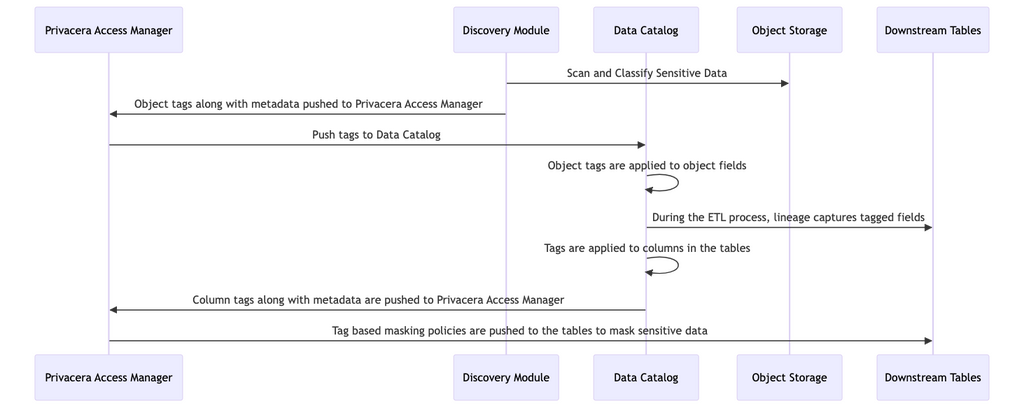

To address these challenges, integrating Privacera with your data catalog, such as DataHub, creates a unified solution that streamlines data access, security governance, and auditing. Here’s how the solution works:

- High-Level Classifications: A small set of high-level classifications (e.g., Public, Internal, Restricted, Classified) is applied to data within the catalog. These classifications are then imported into Privacera, which uses them to enforce access control policies.

- Simplified Access Control with Policies: By combining data classifications with user groups and user attributes, you can create simple attribute-based policies to manage access across the organization. A single policy can provide conditional access to all tagged data, simplifying administration.

- Sensitive Data Discovery: Privacera uses its discovery tool to automatically classify sensitive information at the field level (e.g., PII, PHI, company-specific identifiers) during data ingestion into the data lake (e.g., S3, ADLS, or GCS).

- Catalog Integration: These classifications are then synchronized back into the data catalog, which maps the tags to the relevant data fields. Data lineage in the catalog ensures that the tags follow data as it moves through the system, from storage to processing to reporting.

- Automated Data Masking: Using these classifications, policies can be set up to automatically mask sensitive data at the column level. This ensures that users with appropriate permissions can access the data they need, while sensitive information is protected.

- Auditing and Reporting: Privacera’s auditing capabilities allow organizations to track and report who is accessing sensitive data, what they are accessing, and whether the access was compliant with the organization’s security policies.
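
The mechanics behind the steps above (tags following data through lineage, one tag-based policy, and column-level masking) can be sketched generically in Python. This is not Privacera's or DataHub's API: the function names, the `POLICY` dictionary, and the group and column names are all assumptions chosen to illustrate the pattern.

```python
from collections import deque


def propagate_tags(column_tags, lineage):
    """Copy tags from each column to every column downstream of it in the lineage graph."""
    result = {col: set(tags) for col, tags in column_tags.items()}
    queue = deque(column_tags)
    while queue:
        src = queue.popleft()
        for dst in lineage.get(src, []):
            before = len(result.setdefault(dst, set()))
            result[dst] |= result[src]
            if len(result[dst]) > before:  # revisit downstream only when something changed
                queue.append(dst)
    return result


# One hypothetical attribute-based policy: it covers every column tagged PII, wherever it lives.
POLICY = {"tag": "PII", "allowed_groups": {"privacy-office"}, "mask": "***"}


def read_row(row, table, tags, user_groups, policy=POLICY):
    """Return the row with tagged columns masked unless the user is in an allowed group."""
    out = {}
    for col, value in row.items():
        tagged = policy["tag"] in tags.get(f"{table}.{col}", set())
        if tagged and not (user_groups & policy["allowed_groups"]):
            out[col] = policy["mask"]
        else:
            out[col] = value
    return out


# The email column is tagged once at ingestion; lineage carries the tag to the report.
tags = propagate_tags(
    {"raw.customers.email": {"PII"}},
    {
        "raw.customers.email": ["mart.contacts.email"],
        "mart.contacts.email": ["report.weekly.email"],
    },
)
row = {"id": 7, "email": "ana@example.com"}
print(read_row(row, "report.weekly", tags, {"analysts"}))        # {'id': 7, 'email': '***'}
print(read_row(row, "report.weekly", tags, {"privacy-office"}))  # {'id': 7, 'email': 'ana@example.com'}
```

Because the policy refers to a tag rather than to a specific table, the report column is protected without anyone writing a rule for it, which is the contrast with resource-level grants.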

Architectural view of data catalog and data security and access governance integration

Conclusion

In an era of increasing data complexity and regulatory scrutiny, unifying data catalogs and data security governance is necessary. By integrating these capabilities, organizations can streamline data access, ensure robust security, and maintain auditability without sacrificing efficiency. Solutions like Privacera, when combined with a data catalog, create a seamless flow of data governance and security, making it easier to manage sensitive data while staying compliant and secure. With the right approach, organizations can unlock the full potential of their data while minimizing risk and maximizing trust.

To get a firsthand view of Privacera, please schedule a demo and conversation with a solution architect.